
A year ago, Ben Thompson made it clear that he considers AI to be an entirely new epoch in technology. One of the coolest things about new epochs is that people try out new ideas without looking silly. No one knows exactly what the new paradigm is going to look like, so everything is fair game.

The devices we carry every day have pretty much not changed for 15 years now. Ask a tweenager what a phone looks like and in their mind, it has likely always been a flat slab of plastic, metal and glass.

There have been attempts to bring new AI-powered phone accessories to the fore – I wrote about the Ray-Ban Meta glasses last year – but it has taken until the past couple of months for devices to emerge that are clearly only possible within this new era.

Enter the Humane Ai Pin and the rabbit r1.

Both are standalone devices. Both have a camera and relatively few buttons. Both sport very basic screens (one a laser projector!⚡️) for displaying information to the user rather than for interaction. And both are controlled, primarily, by the user’s voice interactions with an AI assistant.

In theory, these are devices that you can ask to perform just about any task and they’ll just figure out how to get it done.

As a user, this sounds like the ideal scenario, the ultimate user experience. Issue a simple request and have it fulfilled without further interaction. The dream, like an ever-present perfect human assistant.

The Perfect Assistant

But let’s put aside the technology for a moment and figure out what we would expect of that perfect human assistant.

For the sake of this thought experiment, they are invisible, always there and entirely trustworthy. Because we trust them, we would give them access to absolutely everything: email, calendar, messages, bank accounts… nothing is off-limits. Why? Because they will be more effective if they have all of the same information and tools that we do.

So, with all of that at their disposal, the assistant should be able to solve tasks with the context of the rest of my life and their experience of previous tasks to draw on.

Simple Tasks That Are Actually Quite Complicated

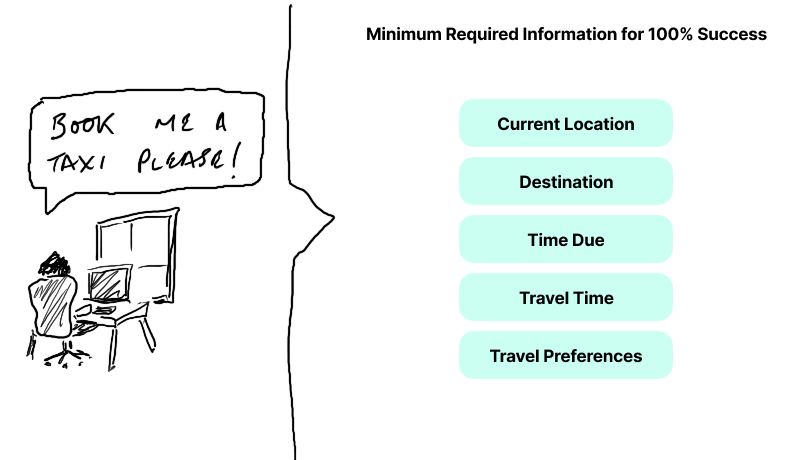

We’ll start with an easy task: “Book me a taxi to my next meeting”

The assistant knows where you currently are, so they know where to arrange the pickup from. And they have access to your calendar, so they know where the next meeting is, and what time it is. They can also look on Google Maps to check the traffic and make sure that you’ll be there on time. They know that you have an account with FreeNow, and prefer to take black cabs when you’re travelling for work. And so, when you ask them to book a taxi, they can do so relatively easily, and you will get exactly what you need.

Exclude one of those pieces of information though, and you will not necessarily end up with the desired result. And that’s for a relatively straightforward request.
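To make that concrete, here is a minimal sketch of the taxi request as a checklist of context. Everything in it is an assumption for illustration: the `TaxiContext` fields, the `missing_context` and `book_taxi` helpers and the example values are hypothetical, and nothing here reflects how any real assistant or taxi service works.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TaxiContext:
    """Hypothetical bundle of everything the assistant needs to know."""
    pickup: Optional[str] = None              # where you are right now
    destination: Optional[str] = None         # from the calendar entry
    arrive_by: Optional[str] = None           # meeting start time
    travel_minutes: Optional[int] = None      # from a traffic check
    provider: Optional[str] = None            # e.g. the account you hold
    vehicle_preference: Optional[str] = None  # e.g. black cab for work


def missing_context(ctx: TaxiContext) -> List[str]:
    """Return the names of any fields the assistant does not know."""
    return [name for name, value in vars(ctx).items() if value is None]


def book_taxi(ctx: TaxiContext) -> str:
    """Only 'book' when every piece of context is present."""
    gaps = missing_context(ctx)
    if gaps:
        return "Cannot book confidently; missing: " + ", ".join(gaps)
    return (
        f"Booked a {ctx.vehicle_preference} via {ctx.provider} "
        f"from {ctx.pickup} to {ctx.destination}, arriving by {ctx.arrive_by}"
    )
```

Leave out just one field, say `vehicle_preference`, and the sketch refuses to book. A real assistant would more likely guess, which is exactly how you end up with a result you didn't want.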

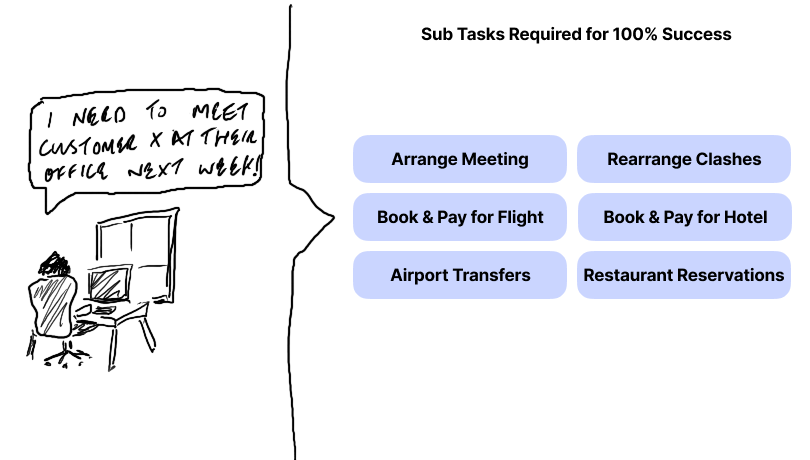

When you make the request more complex, the level of information and the variety of subtasks required become huge. “I need to meet with Customer X at their office next Wednesday”. If you’re in New York and your customer is in Austin, TX, there are flights to be arranged, hotels to be booked and transfers in between, not to mention the diary management that occurs.

These are also pretty normal requests of an assistant – things that happen the world over every day but which are, when broken down, incredibly complex and made up of various interdependent subtasks. Each of them is important and if one of the subtasks fails, the entire task can go wrong.
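One way to picture that interdependence is as a small dependency graph. The sketch below is purely illustrative: the subtask names and the dependencies between them are my own assumptions about how the Customer X trip might break down, not a description of how any product plans work. It uses `graphlib.TopologicalSorter` from the Python standard library (3.9+) to visit each subtask after the ones it depends on.

```python
from graphlib import TopologicalSorter

# Hypothetical breakdown: each subtask maps to the subtasks it depends on.
SUBTASKS = {
    "clear_diary": set(),
    "book_flights": {"clear_diary"},
    "book_hotel": {"book_flights"},
    "book_transfers": {"book_flights", "book_hotel"},
    "confirm_meeting": {"book_flights"},
}


def run_plan(subtasks, failing=frozenset()):
    """Walk the subtasks in dependency order and report what happens.

    A subtask is 'failed' if it is in `failing`, 'blocked' if anything it
    depends on did not finish, and 'done' otherwise.
    """
    status = {}
    for task in TopologicalSorter(subtasks).static_order():
        if task in failing:
            status[task] = "failed"
        elif any(status[dep] != "done" for dep in subtasks[task]):
            status[task] = "blocked"
        else:
            status[task] = "done"
    return status
```

Calling `run_plan(SUBTASKS, failing={"book_flights"})` leaves only `clear_diary` done. The hotel, the transfers and the meeting confirmation are all blocked by a single failure upstream.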

The perfect assistant, though, would be able to handle all of this without breaking a sweat, and we would rely on them because we trust in one of the hardest human traits to replicate: their judgement.

Enter the AI-ssistant

The implied promise in the rabbit r1 keynote is that you will be able to say “I want to go to London around 30th January - 7th February, two adults and one child with non-stop flights and a cool SUV” and it will be able to plan, arrange and book the entire trip for you.

This, if it is true, is remarkable, precisely because of how complex and interlinked these tasks actually are. Even if we remove the professional elements from the above example, the subtasks involved in booking a trip like this and the understanding required are still huge.

I think the r1 is a cool concept, but the hand-wavy elements of the keynote (“Confirm, confirm, confirm and it’s booked”) are alarming, precisely because those are the actually hard parts. Getting ChatGPT to spit out a travel itinerary is easy, but actually having an AI that is able to follow through and execute properly on all of the required tasks is another matter.

Don’t misunderstand me, I fully believe that an AI could navigate a webpage and follow the process to select and pay for things. I can see in the keynote that the r1 has the ability to connect to Expedia and would bet that it can book a hotel on the site.

My quandary is that when I, as an actual human™, go onto Expedia to book the above trip, I’m presented with over 300 options just for the hotels. At the top hotel, there are 7 different room types with only a couple of hundred dollars in cost difference for the entire stay between the largest and the smallest. This is already complicated before I throw in personal taste.

Then throw in flights, where the option that I’d likely choose based on my personal time preferences is actually 9th on the price-ranked list (which is still within $20 of the cheapest option), and I just don’t see how the r1 is ever going to give me what I actually want. I know what that is, and I know that a human assistant who has gotten to know my preferences and proclivities would likely make the same choices as I would, but that’s because we both have that very human trait of personal judgement.
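The gap between the cheapest option and the one I'd actually pick can be sketched in a few lines. The flights, prices and the "dollars per hour away from my preferred departure time" weighting below are all made up for illustration. The weighting in particular is the kind of number a human assistant learns over time, and I'm not suggesting any device does or could use it.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Flight:
    code: str            # made-up label, not a real flight number
    price: float         # in dollars
    departure_hour: int  # 24-hour clock


def by_price(flights: List[Flight]) -> List[Flight]:
    """Rank purely on price, as a booking site's default sort might."""
    return sorted(flights, key=lambda f: f.price)


def by_preference(flights: List[Flight], preferred_hour: int,
                  dollars_per_hour_off: float) -> List[Flight]:
    """Rank on price plus a personal penalty for inconvenient times."""
    def cost(f: Flight) -> float:
        hours_off = abs(f.departure_hour - preferred_hour)
        return f.price + dollars_per_hour_off * hours_off
    return sorted(flights, key=cost)


# Illustrative data only.
flights = [
    Flight("A", 500.0, 6),
    Flight("B", 505.0, 22),
    Flight("C", 518.0, 10),
]
```

With these numbers, `by_price(flights)` puts flight A first, while `by_preference(flights, preferred_hour=10, dollars_per_hour_off=10.0)` puts flight C first, even though it costs $18 more. The hard part isn't the sorting, it's knowing my penalty in the first place.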

I can see how an AI that has had access to all of my past bookings may be able to detect patterns and preferences, but I can’t see any evidence that the r1 has that access, or the ability to learn about me personally. I won’t comment on the Humane Ai Pin in detail, but I can’t see much evidence of that there either.

My feeling is that a “just” good assistant, one that is simply able to follow your directions and get stuff done, is actually quite hard to replicate. Combine that with the traits of a great assistant, one that has good judgement, can anticipate your needs and potentially even solves problems before you ask, and we’re at another level of complexity.

It’s not that I’m bearish on AI assistants as a whole, but I do think that the role of being an assistant is much more complex and personal than people imagine. I can’t wait to see where we end up with the AIs that we interact with daily, but I can’t help but feel that an assistant in this manner just isn’t it. Yes, help me sort through the cruft of those 300 hotels, but I don’t think I’ll trust an AI to make the call for me in the same way as I would a human, at least not anytime soon.