Chop Chop!

- A Paradigm Shift in AI Reasoning: OpenAI’s o1 (Strawberry) model introduces built-in reasoning and self-correction, moving beyond the “one-shot” approach to improve accuracy.

- Self-Correction in Action: Unlike its predecessors, o1 employs a “Chain of Thought” mechanism to iteratively verify and refine its responses before presenting them to the user.

- Accuracy vs. Speed Trade-offs: While o1 achieves higher accuracy, its self-correction process significantly increases the computational cost and response time, posing challenges for user expectations of instant results.

- Toward Trustworthy AI: The evolution of models like o1 highlights a critical shift toward trustworthy, thoughtful AI—though true considered thought and learning from interactions remain distant goals.

- Reconciling Expectations: Balancing users’ demand for immediacy with the benefits of deliberate, accurate reasoning will be central to the future of AI-human interactions.

In Full...

Two weeks ago, I explored a basic method for error-checking the output of Large Language Models (LLMs). Just a week later, OpenAI unveiled their latest model, o1 (Strawberry)—the first frontier model boasting built-in reasoning and iterative correction. This isn’t merely another version update; o1 signifies a fundamental shift in how we conceptualize reasoning within AI models and how we engage with them.

The Evolution of AI Reasoning

The arrival of o1 is exciting for two primary reasons. First, it incorporates a “Chain of Thought” mechanism, effectively deconstructing user prompts into logical steps to generate more accurate answers. Second, it utilizes this chain of thought to verify its own outputs—a more advanced iteration of the error-checking approach I discussed in my previous article.

But what does this mean in practical terms? Ethan Mollick’s crossword example provides a clear illustration. When solving a crossword puzzle, multiple answers may fit a given clue, but only one will integrate with the rest of the puzzle. For instance, the clue “A red or green fruit that is often enjoyed pressed as an alcoholic drink (5 letters)” could be answered with either GRAPE or APPLE, but only one will align correctly with the surrounding answers.

Most LLMs would generate GRAPE and move forward without re-evaluating its fit within the broader puzzle. In contrast, o1 excels by looping back, much like a human would, to recognize that GRAPE doesn’t fit and subsequently correct it to APPLE.

For the first time, the exchange long familiar to regular LLM users (“that doesn’t look right, are you sure?” followed by “Yes, sorry, you’re right, the correct answer is APPLE”) happens inside the model, before the response reaches the user. Consequently, o1 and its successors are poised to deliver responses that are significantly more accurate than those of their predecessors.
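The difference between committing to the first answer and looping back can be sketched in a few lines of Python. This is a toy illustration only, not a description of how o1 works internally: the function names, the candidate list, and the crossing letters in `crossings` are all hypothetical and invented for the example.

```python
# Toy illustration of "one-shot" versus "loop back and check".
# The candidates and crossing letters are made up for this example.

def fits(word, crossings):
    """Return True if the word matches every known crossing letter."""
    return all(word[pos] == letter for pos, letter in crossings.items())

def one_shot(candidates):
    """Commit to the first candidate without checking the grid."""
    return candidates[0]

def check_and_revise(candidates, crossings):
    """Try each candidate in turn and keep the first one that fits."""
    for word in candidates:
        if fits(word, crossings):
            return word
    return None  # no candidate fits the grid

candidates = ["GRAPE", "APPLE"]
# Hypothetical crossing answers fix letter 0 as "A" and letter 4 as "E".
crossings = {0: "A", 4: "E"}

print(one_shot(candidates))                     # GRAPE
print(check_and_revise(candidates, crossings))  # APPLE
```

The one-shot path returns GRAPE because it never consults the grid. The checking path rejects GRAPE on its first letter and settles on APPLE.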

AI’s Inability to Loop Back: A One-Shot Problem

The inability of previous models to revisit and rectify errors is a fundamental limitation. Once a model generates its first token (roughly, a word or part of a word), it sets a trajectory that cannot be altered, even if the answer becomes evidently incorrect midway through. By the time an error is clear, there is no mechanism in place to correct it, leaving users with incorrect information.

There was, however, a brief period when AI appeared to be self-correcting, albeit not for accuracy. Around the time Ben Thompson described Sydney, Microsoft’s “sentient” and unpredictable AI with a distinct personality, models would start an answer, delete it halfway, and revise it to comply with safety checks.

So, why hasn’t self-correction for accuracy been standard? The primary obstacles are the significant costs in processing power and time. For instance, o1’s output token cost is quadruple that of its predecessor, 4o, due to the increased number of tokens processed and generated. In Mollick’s crossword experiment, the self-correcting model required a full 108 seconds to solve the puzzle—substantially longer than typical models. Users generally resist slow and expensive systems, favoring the “one-shot” approach where LLMs provide immediate answers despite potential inaccuracies.
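To get a feel for how quickly these costs compound, here is a rough back-of-the-envelope sketch. Only the fourfold price ratio comes from the figures above. The token counts are invented for illustration, the function name is hypothetical, and the sketch assumes that the model's hidden reasoning tokens are billed at the same rate as visible output tokens.

```python
# Rough cost comparison. Only the 4x price ratio comes from the article;
# the token counts below are invented purely for illustration.

def relative_cost(price_ratio, baseline_tokens, reasoning_tokens, answer_tokens):
    """Cost of a reasoning response relative to a one-shot response.

    Assumes hidden reasoning tokens are billed at the output-token rate.
    """
    reasoning_total = reasoning_tokens + answer_tokens
    return price_ratio * reasoning_total / baseline_tokens

# Hypothetical: a 500-token one-shot answer versus 2,000 reasoning tokens
# plus the same 500-token visible answer.
ratio = relative_cost(price_ratio=4, baseline_tokens=500,
                      reasoning_tokens=2000, answer_tokens=500)
print(ratio)  # 20.0
```

Under these made-up numbers, the higher per-token price and the extra tokens multiply together, so a fourfold price difference becomes a twentyfold difference per answer.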

Instant Answers

Reflecting on computing history, early machines like Turing’s Bombe and Tommy Flowers’ Colossus operated under the assumption that obtaining correct answers would take measurable processing time. The Bombe, for instance, took 20 minutes to crack an Enigma setting through sheer mechanical effort.

As technology advanced—silicon became faster, high-speed broadband became ubiquitous, and social media reshaped our expectations—we became accustomed to instant results. Marissa Mayer, as early as 2006, championed the gospel of speed, emphasizing its importance: a mere half-second delay in loading Google results led to a 20% drop in traffic and revenue.

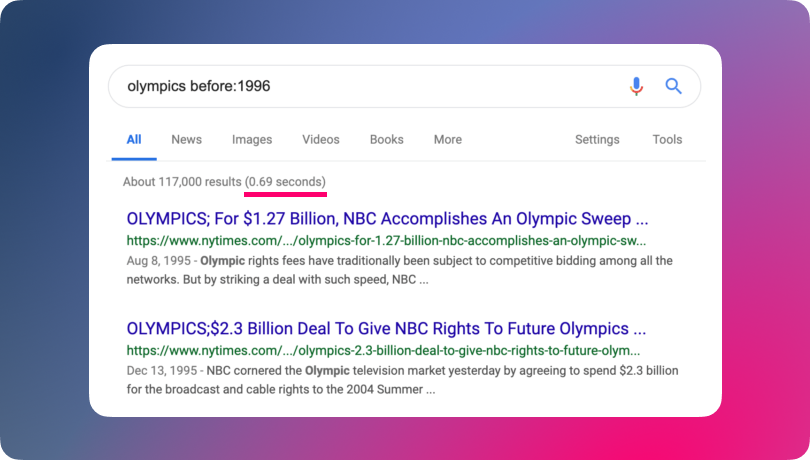

For years, Google’s search results demonstrated the value placed on speed: ten blue links showcased alongside a proudly proclaimed search time of “0.69 seconds.” We’ve come to expect immediate gratification from the web, making the idea of waiting for an AI’s answer feel counterintuitive.

When OpenAI launched the ChatGPT interface, one of its standout features was the immediate, letter-by-letter streaming of responses—a user interface now standard across all LLMs. This technique creates an illusion of speed, keeping users engaged even as the AI continues to generate its final thoughts. However, this approach has a drawback: if the AI begins streaming responses before fully processing the answer, there’s no opportunity to correct errors that may emerge later in the generation process.
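The trade-off between streaming and correcting can be sketched as follows. This is a toy model rather than any real chat interface: the token list, the `review` step, and all function names are hypothetical stand-ins.

```python
# Toy contrast between streaming and buffering a response.
# The draft tokens and the review step are hypothetical stand-ins.

def generate_tokens():
    """Pretend model: yields tokens one at a time, with a mistake in the draft."""
    yield from ["The", "answer", "is", "GRAPE"]

def stream(tokens, shown):
    """Show each token as soon as it is produced; nothing can be recalled."""
    for token in tokens:
        shown.append(token)

def review(draft):
    """Hypothetical check that swaps the wrong answer for the right one."""
    return ["APPLE" if token == "GRAPE" else token for token in draft]

def buffer_then_review(tokens, review_fn, shown):
    """Hold the full draft back, review it, then show the final version."""
    draft = list(tokens)
    shown.extend(review_fn(draft))

streamed, buffered = [], []
stream(generate_tokens(), streamed)
buffer_then_review(generate_tokens(), review, buffered)

print(" ".join(streamed))  # The answer is GRAPE
print(" ".join(buffered))  # The answer is APPLE
```

The streamed version has already shown the user GRAPE by the time the draft is complete. The buffered version can fix the mistake, but the user sees nothing until the review has finished.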

Thinking, Fast and Slow

Humans are often advised to take their time when responding to questions. Whether in interviews, tackling complex problems, or strategic planning, deliberate and reasoned thought typically yields better outcomes. Even individuals who seem to answer questions instantly have usually spent considerable time formulating their thoughts in advance.

Many readers will be familiar with Daniel Kahneman’s Thinking, Fast and Slow—the 2011 book that introduced his thesis of the dual systems of human cognition:

- System 1: Handles fast, automatic, and unconscious thinking

- System 2: Manages slow, deliberate, and conscious reasoning

Most current LLMs operate primarily within the realm of System 1: they generate responses quickly and effortlessly, akin to off-the-cuff remarks.

Even with advancements like the o1 model, the process still resembles a System 1 response. Instead of engaging in true System 2 thinking, o1 appears to internally repeat and refine its initial System 1 output until it arrives at what it believes is the correct answer. This suggests that while o1 can self-correct, it’s still fundamentally reliant on rapid, surface-level processing rather than deep, reasoned thought.

One might argue that System 2 thinking in AI could manifest as error correction and analysis of System 1 outputs. However, the sheer volume of output tokens required indicates that the model is effectively “thinking out loud” internally, even if these thoughts aren’t explicitly shared with the user. This internal vocalization mirrors how humans might silently debate and refine their answers before speaking, but it doesn’t reflect the considered evolution of a thought over a significant period.

Considered thought is something that LLMs, as they currently operate, are simply not capable of. Even at the level of o1, they are trained to a cutoff point and don’t learn from interactions with users, nor do they have “defined” thoughts built up over time on any subject. The same question phrased in two different ways could yield two vastly different responses because the AI is considering that question—and vitally its phrasing—for the first time, even on its ten-thousandth repetition.

Considered Thought

Could LLMs achieve considered thought akin to a human, though? This ventures into the realm of discussing Artificial General Intelligence (AGI)—a topic I prefer to sidestep for now. However, we must contemplate what future models might look like and whether they could be said to have “deliberated” over a topic.

A model that continuously learns from interactions and previous answers is the most likely candidate for exhibiting “considered thoughts.” The advent of such a model, however, remains distant, as it is prohibitively expensive in terms of time and compute (and, undoubtedly, financial resources) to regularly, let alone continuously, retrain a model. Nevertheless, it seems likely that this will be the eventual evolution of these models, with the ability to learn from all past interactions and build upon previous answers.

Until then, we’re likely to be left with models that use processes like o1 to imitate reasoned thought, checking and refining their responses before delivering them to the user.

Balancing User Expectations of Instantaneity with Correct Answers

Herein lies a profound challenge: users expect instant, accurate results, but by their very nature, LLMs achieve their highest accuracy when they don’t respond immediately. How do we reconcile these conflicting expectations?

We could allocate more processing power to make the system appear faster, but the cost implications are substantial. Alternatively, can we educate users to understand that AI requires “thinking time”? While instant responses align with user expectations shaped by the internet’s speed, there’s a growing imperative to prioritize accuracy and depth of reasoning to ensure that the responses delivered by LLMs are trustworthy and dependable.

The Dawn of Trustworthy AI

o1’s capacity for reasoned thought and self-correction goes beyond merely improving on the outputs of previous models. It marks a significant stride toward creating genuinely intelligent systems capable of partnering with us in addressing the complex challenges of our world.

As AI models advance toward incorporating internal reasoning and self-correction, we need to consider how we can manage user expectations and ensure they understand that the correct answer isn’t necessarily the immediate one. Balancing the innate human desire for instant results with the necessity for accurate, thoughtful responses will be paramount. By valuing deliberative processes alongside speed, we will start to see AI applications that can be trusted to actually deliver on AI’s early promise.

Why It Matters...

The current generation of LLMs works well in low-stakes environments where high trust isn’t essential, but they still need significant human oversight. Models like o1 change that. With their ability to handle more complex tasks and self-correct, these models open the door to trusting AI in critical roles. This shift could save companies money, reduce errors, and unlock new possibilities by allowing AI to take on more responsibility and deliver reliable results in challenging scenarios.