
Media speculation has been swirling around the idea that Apple is lagging behind in the AI race.

The headline advances in AI over the past couple of years have been generative technologies, and if you’re comparing chatbots then, yes, Siri looks like ancient technology. Apple’s approach to AI, though, has been to infuse it throughout iOS, with the goal of incrementally improving the user experience without highlighting to users just how much of the heavy lifting the AI is actually doing.

This is consistent with the Steve Jobs playbook: “You’ve got to start with customer experience and work backwards to the technology. You can’t start with the technology.” Apple isn’t focused on making AI the centrepiece of its product launches. In fact, it has previously gone out of its way to avoid the term, preferring “Machine Learning” to describe the technologies that underpin the experience. Look around iOS, though, and AI is omnipresent.

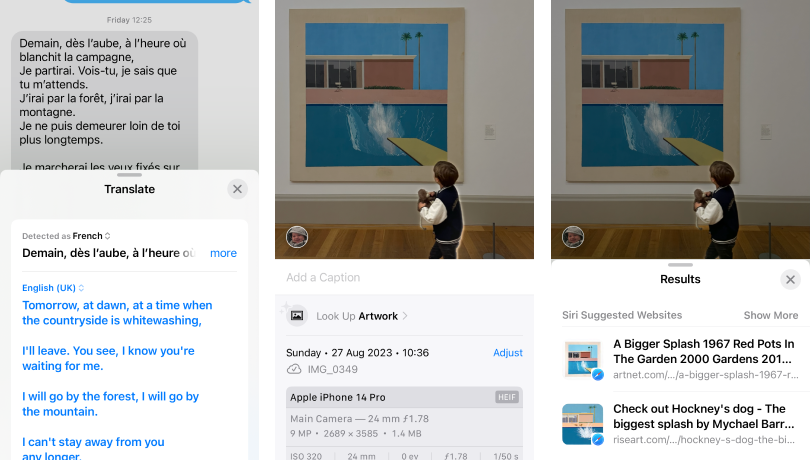

Features that rely on Artificial Intelligence have been integrated throughout iOS: Cameras produce a quality of image far beyond what is achievable solely with the tiny optics fitted to the phone. Messaging has translation baked in. And swiping up in Photos gives options to look up the Artwork (or Landmark, Plant or Pet) shown in the image.

The approach that Apple takes is quite different from that of other manufacturers – these aren’t flagship features, but relatively subtle refinements to the overall product that work towards that overarching goal of improving the customer experience.

Generative Hype

On Thursday, during an earnings call, Tim Cook addressed the question of AI directly, stating:

“As we look ahead, we will continue to invest in these and other technologies that will shape the future. That includes artificial intelligence where we continue to spend a tremendous amount of time and effort, and we’re excited to share the details of our ongoing work in that space later this year.”

“Let me just say that I think there’s a huge opportunity for Apple with Gen AI and AI, without getting into more details and getting out in front of myself.”

This is the most direct indication that the company is looking to bring more generative AI features to iOS. What form that will take is still speculation, but we can assume that one area that will be addressed is Siri.

What is Siri?

To most, Siri is Apple’s slightly confused voice assistant.

Whilst this part of Siri desperately needs improvement in the context of the other services that users have become familiar with, I think that it’s unlikely that we’ll see Apple release a chatbot with the freedom that GPT (or Bing) had. I just can’t see a world where Apple allows Siri to tell users they are not good people.

Apple has an additional challenge in their overall approach: their privacy-focused stance has seen more and more of their ML tasks performed on-device, and Apple’s long-term investment in the dedicated Neural Engine core (first introduced in 2017) has demonstrated that their strategy is very much focused on doing as much as possible without leaving the device. This results in some limitations in both the size and quality of the model that underpins Siri – what ChatGPT achieves running in a Microsoft data centre, Siri needs to do within the phone.

The slightly lacklustre voice assistant isn’t Siri though. Voice is simply one of the interfaces that allows users to interact with Siri. Look for Siri elsewhere and you will start to see that Apple considers Siri to be almost everything that is powered by AI.

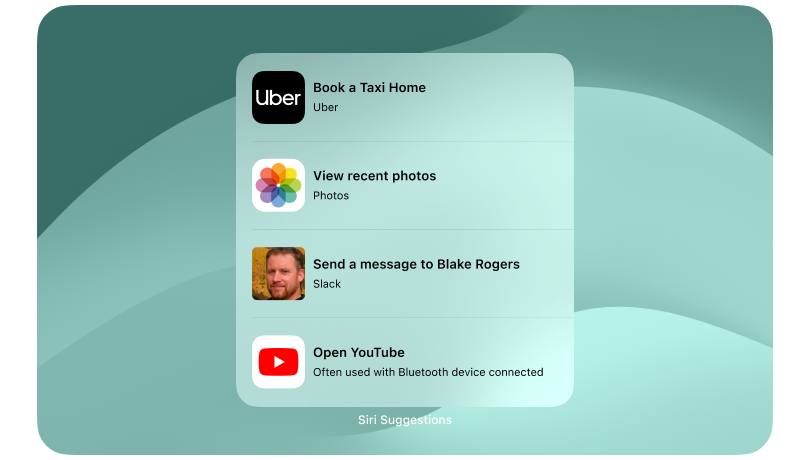

When widgets were introduced in iOS 14, Apple included one particular widget which I think hints at the direction of Apple’s eventual longer-term AI integration: Siri Suggestions.

The widget actually comes in two types: a curated selection of 8 apps that changes based on context and location, and a set of suggested actions based on what Siri anticipates you will want to do within apps, again based on your context. Whilst I think both are brilliant, and I use both types on my own home screen, it is the second that I think gives the best indication of where Apple’s AI strategy is heading.

Apple provides the ability for apps to expose “Activities” to the wider operating system. Whether that is sending a message to a specific friend, booking a taxi or playing the next episode of a show, each activity is available to the widget without needing to go into the app to find it.

Within the widget, Siri then presents what it thinks are the most relevant activities for a given time and place. Arrive at a restaurant at 8pm and don’t look at your phone for two hours? Don’t be surprised if the top suggestion when you do is to book a taxi back home. Usually call your parents at 7pm on a Sunday? Expect a prompt to call them to appear. By combining the contextual clues that the operating system has, its data on your historical patterns and the activities available to it, Siri is uniquely placed to predict accurately what you want to do.
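To make that idea concrete, here is a toy sketch in Python of ranking activities by how well past usage matches the current context. It is not how Siri works internally and it uses no Apple API: the `Activity` and `Context` types, the `rank_activities` function, the weights and the sample history are all invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    """A hypothetical action an app exposes to the system."""
    app: str
    action: str


@dataclass(frozen=True)
class Context:
    """A hypothetical snapshot of when and where something happened."""
    weekday: int  # 0 = Monday ... 6 = Sunday
    hour: int     # 0-23
    place: str    # e.g. "home", "restaurant"


def rank_activities(history, now, limit=3):
    """Rank activities by how closely their past contexts match `now`.

    `history` is a list of (Activity, Context) pairs. The weights below
    are arbitrary assumptions, and hours are compared naively (no
    wrap-around at midnight).
    """
    scores = {}
    for activity, past in history:
        score = 0.0
        if past.place == now.place:
            score += 2.0
        if past.weekday == now.weekday:
            score += 1.0
        if abs(past.hour - now.hour) <= 1:
            score += 1.0
        scores[activity] = scores.get(activity, 0.0) + score
    ranked = sorted(scores, key=lambda a: scores[a], reverse=True)
    # Drop activities with no contextual match at all.
    return [a for a in ranked if scores[a] > 0][:limit]


call_parents = Activity("Phone", "Call parents")
book_taxi = Activity("Taxi", "Book a ride home")

# Made-up usage history: Sunday evening calls, late-night taxis from restaurants.
history = [
    (call_parents, Context(weekday=6, hour=19, place="home")),
    (call_parents, Context(weekday=6, hour=19, place="home")),
    (call_parents, Context(weekday=6, hour=19, place="home")),
    (book_taxi, Context(weekday=4, hour=22, place="restaurant")),
    (book_taxi, Context(weekday=4, hour=22, place="restaurant")),
]

sunday_evening = rank_activities(history, Context(weekday=6, hour=19, place="home"))
late_dinner = rank_activities(history, Context(weekday=5, hour=22, place="restaurant"))
print(sunday_evening[0].action)
print(late_dinner[0].action)
```

With this invented history, the Sunday evening context surfaces the call and the late restaurant context surfaces the taxi. A real system would presumably learn these signals rather than hard-code weights, but the shape of the problem is the same: context plus history plus a list of available actions.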

The most notable element is that the focus here is on actions using apps rather than on the app itself. This returns us to the primary driver of good user experiences: helping the user to achieve their desired outcome in the easiest possible way.

Actions, Activities and App Clips

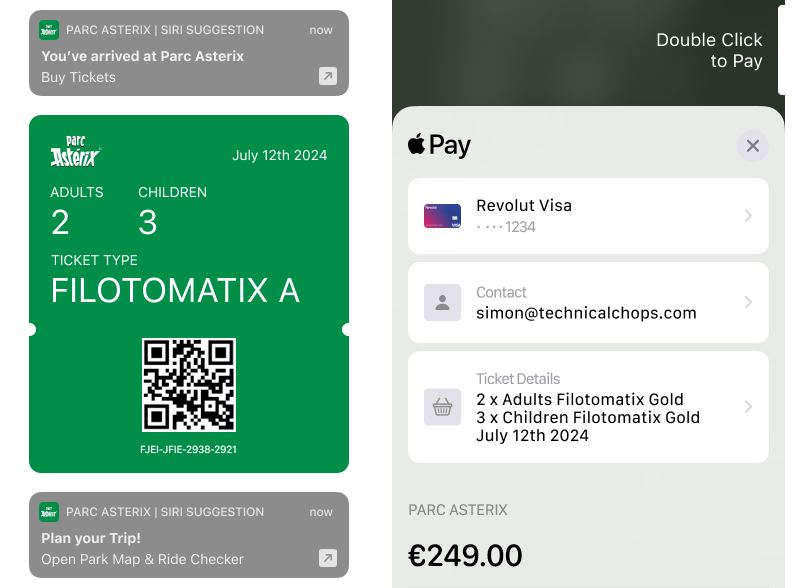

Given that many people have spent the past decade using a smartphone, it is not uncommon to have hundreds of apps installed, most used extremely rarely. I, for some reason, still have an app for Parc Asterix installed despite last visiting for just one day nearly 4 years ago.

We’re moving away from the days of “There’s an app for that” and into the days of “Why do I have to download an app to do that?”. Apple’s solution, introduced in 2020, is App Clips.

App Clips are a way for developers to provide access to features of their app to a user without having them download the full app. They’re often contextual – a restaurant could provide access to their menu, ordering and payment through an App Clip launched from a code or NFC tag on a table. In Apple’s words: “App Clips can elevate quick and focused experiences for specific tasks, the moment your customer needs them.”

Whilst I’ve rarely seen App Clips used in the wild, I sense that this is another example of Apple moving pieces into place as part of their future strategy.

Fewer Apps, more Actions

By encouraging developers to provide access to specific actions or features within an installed app or via an App Clip, Apple has created an environment for their devices in which Siri can provide users with the correct action based on context, potentially without users even needing to have the app installed.

As Siri’s features become more powerful, I predict that Apple will start to leverage the actions more and more, potentially even handing off the interaction entirely to Siri.

Take the Parc Asterix app for example – my ideal user experience is that my phone knows when I’ve arrived, checks my emails for any tickets that I already have and presents the option to buy them natively (no downloading apps) when I don’t. When I’m inside the park, I want it to provide me with easy access to an App Clip which contains a map and ride waiting times. But then I want to be able to leave and not have yet another app that won’t be used for years.

Apple’s head start

It’s easy to point at Siri’s chat functionality and suggest that Apple is falling behind, but I think the reality is quite different. Apple has spent almost a decade building AI tools that seamlessly integrate with the operating system. They have the greatest level of access to information possible because, for most people, our lives are lived within our phones. I want to see Apple leveraging that and integrating AI throughout the OS to work for me and make my life that much easier.

Where the likes of rabbit have been working on Large Action Models for the past year, Apple has been at it for a decade.

I do hope that Siri’s chat functionality gets a lift this year, but I don’t think that should be the focus. I want a device that anticipates me and understands what I need to make my life easier. Apple, more than anyone, is able to deliver on that.